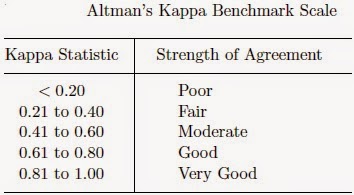

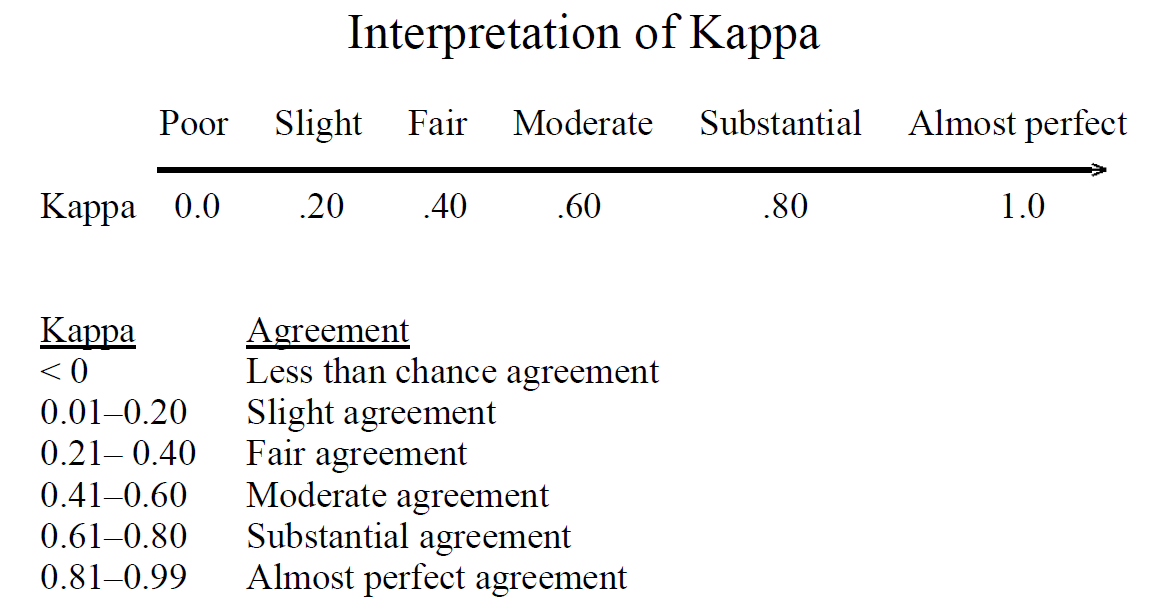

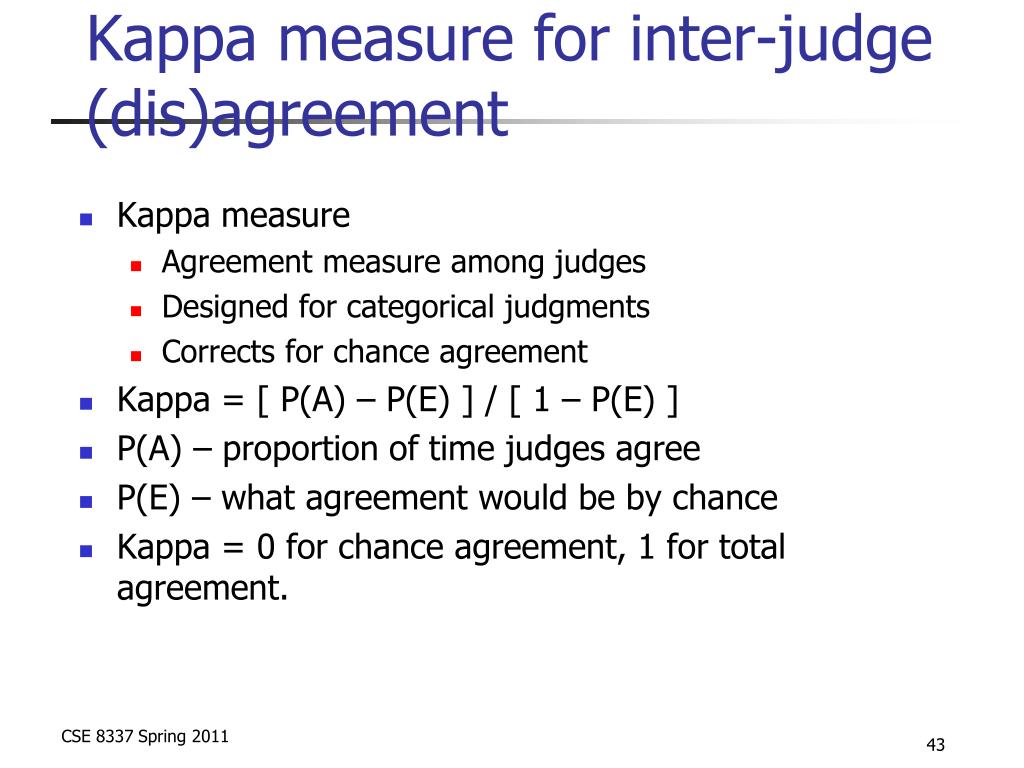

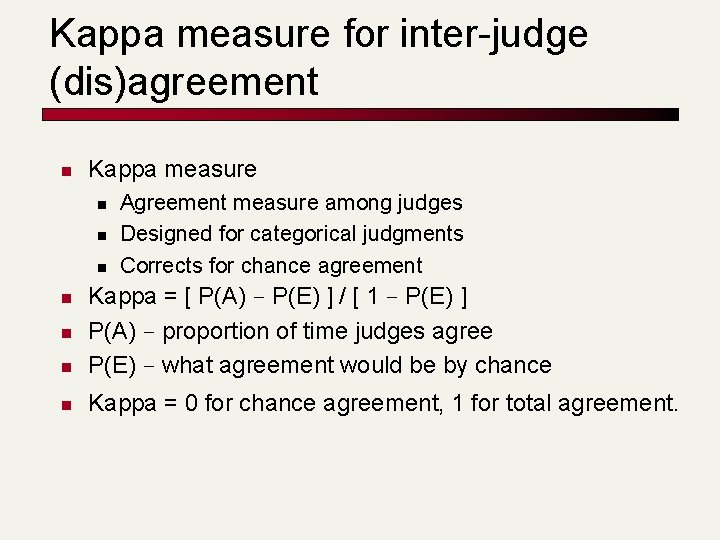

Interpretation of Kappa Values. The kappa statistic is frequently used… | by Yingting Sherry Chen | Towards Data Science

Interrater agreement and interrater reliability: Key concepts, approaches, and applications - ScienceDirect

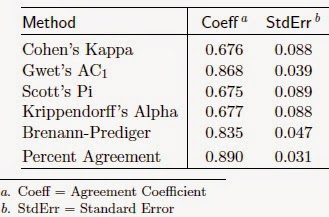

K. Gwet's Inter-Rater Reliability Blog: 2014 | Inter-rater reliability: Cohen kappa, Gwet AC1/AC2, Krippendorff Alpha

Inter-Annotator Agreement (IAA). Pair-wise Cohen kappa and group Fleiss'… | by Louis de Bruijn | Towards Data Science

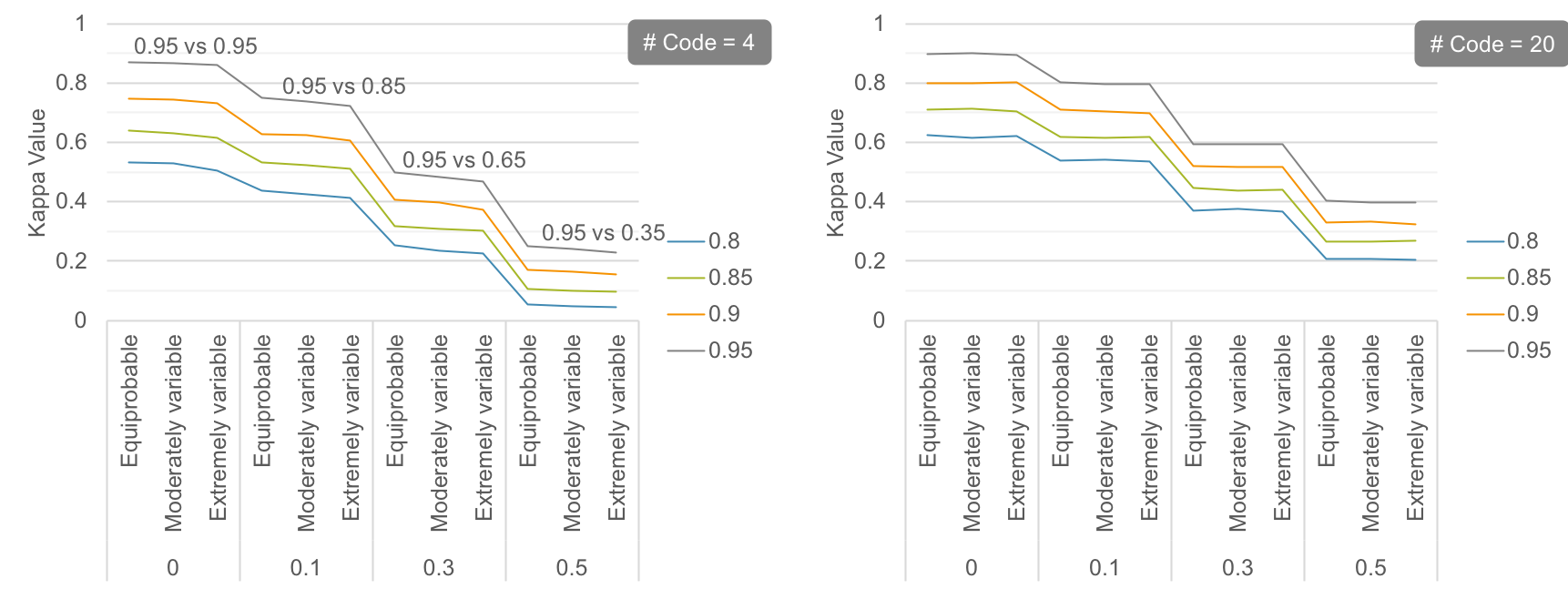

Kappa statistics to measure interrater and intrarater agreement for 1790 cervical biopsy specimens among twelve pathologists: Qualitative histopathologic analysis and methodologic issues - Gynecologic Oncology

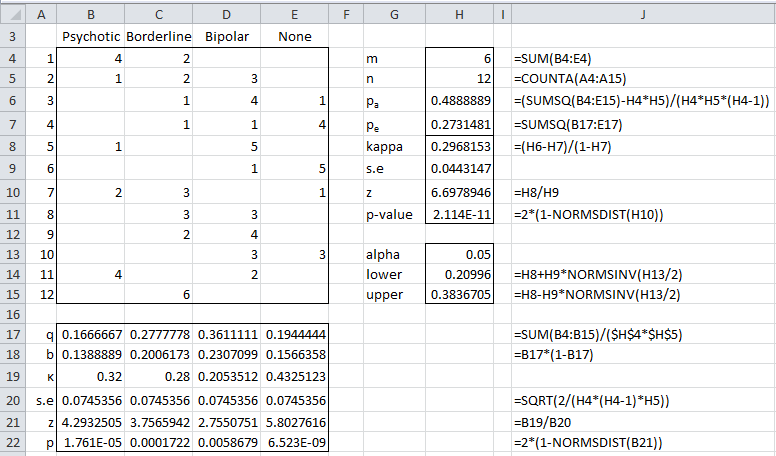

![Interrater reliability: the kappa statistic | Semantic Scholar (Table 1)](https://d3i71xaburhd42.cloudfront.net/bf3a7271860b1667e3ceb84e5bc400d2635ff8b7/3-Table1-1.png)

![Interrater reliability: the kappa statistic | Semantic Scholar (Table 2)](https://d3i71xaburhd42.cloudfront.net/bf3a7271860b1667e3ceb84e5bc400d2635ff8b7/3-Table2-1.png)